Now we have come to the machine learning way of mining opinions aka sentiment analysis. Here is brief background on Machine Learning:

Machine learning (ML) is a subset of Artificial Intelligence (AI). This field refers to training machines in doing certain tasks that they get better at, over time.

At a higher level, ML has three subtypes: Supervised, Unsupervised and Reinforcement learning.

In the supervised machine learning technique, the machines are trained on mathematical models with the input data that is already labelled. Once trained the model is then tested against more data (not the one that is used in training) and this time around the model generates the labels (also known as predictions). The lesser the difference between correct and incorrect predictions, the better, for obvious reasons. Generally, ML models can be better trained on a relatively bigger corpus of data. Mathematical representation is as follows:

- Y=f(X)

- X: input

- Y: label/prediction

Note: In the interest of keeping this blog concise, I am not getting into the details of the remaining machine learning techniques which I will be covering in future blogs

Further expanding the Supervised section here:

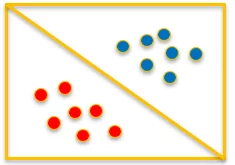

- Classification: When the expected outcome is a “definite” value which is known as a category/class. Examples: Spam or ham, black or white, positive or negative, colors (red, green, blue) etc.

- Regression: When the expected outcome is real or continuous. Examples: stock predictions, forecasting, age prediction, salary prediction. Notice, all these predictions are based on multiple factors, e.g. if the expectation is to predict the price of the house that it can be based on multiple factor such as number of rooms, area/sq ft, location and the sale purchase history of that location etc.

Sentiment analysis falls into the former, i.e. classification

In some cases, regression can also become a form of classification. For some experts, there is not “Classification” subtype. Example: Regression classifiers produce scores and classification is can be applied as the category on the given range of scores. Example:

- Regression to produce a score between 0…1.

- Range: 0.1…0.3: Negative

- Range: 0.4…0.7: Neutral

- Range: 0.7…1.0: Positive

Let’s go over these steps, one after the other.

- Information Collection: Getting the corpus of data that will be used to train and test the model aka the classifier. Example: movie or product data that is already labelled (more on this later)

- Preprocessing text: Cleaning it out by removing stop words (words that do not help in the mining of the text itself as they do not carry the meaning all by themselves. Ex: ‘the’, ‘they’, ‘is’, ‘at’ etc.), numbers, punctuations, html tags etc. The remaining words are also lemmatized in this step

- Features: Converting the textual words to their numeric representation (vectorizing text). This step is needed for training ML models. Simply put machines don’t understand text, they get the numbers.

- Training: Transformed data at this stage is split into training and testing sets. The training set is used to train the ML classifier by providing both the features and labels as inputs. There are multiple classifiers that can be experimented with and they are available in toolkits and libraries (such as scikit-learn in Python), out-of-the-box. The key point here is “experimentation”; there is no one-size-fits-all algorithm available. Some classifiers are good with sentences, some are better with words etc. The performance of the given classifier is also dependent upon how the given vectors were created in the first place (step 3). Some common classification algorithms are: Naïve Bayes, Support Vector Machine and Decision Tree Classifier, Deep Learning (neural nets).

- Testing: Once trained, the model is then tested with the testing set (generally the data is split 80:20, 80% for training and 20% for testing the model but there is no hard and fast rule, you can change the split size based on your requirement). In this phase, only the features are provided, and the classifier produces the label (classification). If you can achieve a satisfactory level of accuracy (the difference between the predicted classification and the correct one), then move to the next step otherwise keep improving (by moving back and forth between steps: 4-5, sometimes step 3 also needs to be tinkered with).

- Making Predications: After the successful testing of the model, it is time for it to be deployed and it starts making predictions every time it is given a movie review

Note: its important to keep your model trained over time with the datasets that contain new problem statements, for it to remain acceptably accurate.

- ML is dynamic in nature unlike the rules-based lexicon which is tuned to follow static predefined rules. Putting it another way, there is an opportunity for training the model to “learn” from the provided dataset

- There is no SME (Subject Matter Expert) required to create the rules for the ML based approach. This is tied to the point above

- The prediction accuracy of the ML model is completely dependent upon the quality of the data provided for training in the first place. Generally, the size of the corpus also matters, the bigger the size (meaning the more the problem statements) the better the model is trained to produce accurate predictions. Not true in the lexicon’s case.

- In the absence of the rules-based parser for the given situation, the use of the machine learning approach becomes a viable option

- ML based solutions in general are easier to scale but are difficult to debug

- Context can be confusing for lexicons because they are not designed to “learn” from data, this is where ML excels

If you have any question or queries, do not hesitate to reach out to us!

1 thought on “Sentiment Analysis – Machine Learning Classification Use Case”

Comments are closed.